COVE: a tool for advancing progress in computer vision

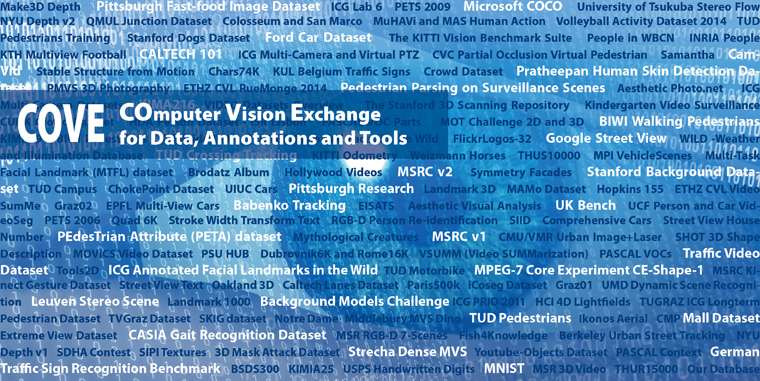

Centralizing available data in the intelligent systems community through a COmputer Vision Exchange for Data, Annotations and Tools, called COVE.

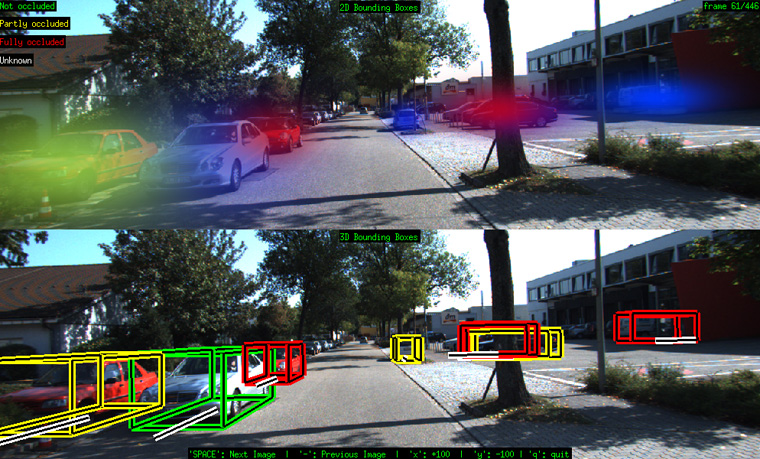

Computer vision is integral to many forms of artificial intelligence, with applications ranging from the critical (autonomous vehicles recognizing pedestrians), to the useful (finding a video that shows you how to cook your next delicacy), to the simply fun (searching for images from a recent family vacation). Recent technical advances in computer vision have revealed that continued progress in the field depends on easy access to a massive network of reliable, diverse, open-source datasets. Unfortunately, nothing like this currently exists.

To remedy the situation, a new project has been launched with the support of the National Science Foundation, the Computer Vision Foundation, and computer vision experts around the world. Based at the University of Michigan with collaborators at Boston University and the University of Notre Dame, the project aims to centralize available data in the intelligent systems community through a COmputer Vision Exchange for Data, Annotations and Tools, called COVE.

The goal of COVE is to provide open and easy access to up-to-date, varied datasets, annotations, and their relevant tools. The project promises to have an immediate and far-reaching impact on the computer vision community, as well as on researchers in machine learning, multimedia, natural language processing, data mining, and information retrieval.

“COVE will allow a large component of the information and intelligent systems community to build on the work of others in ways not currently possible,” said project director Prof. Jason Corso of the Department of Electrical Engineering and Computer Science at Michigan.

Researchers in computer vision gather datasets that are groomed to be within an attainable level of difficulty. Once researchers have saturated performance on one large dataset, they go in search of another in order to develop even better techniques for helping computers recognize objects. It is also important to leverage multiple datasets because of the bias inherent in any single one.

“For example,” said Prof. Kate Saenko, “if a self-driving car learns to detect objects from a dataset collected in California, it will be biased towards sunny weather conditions and may fail to see objects in rain or snow. This project will help the community understand how such dataset biases can be addressed.” Prof. Saenko, of the Computer Science Department at Boston University, is a co-PI on the project.

These datasets may include, for example, images (ImageNet), human motion (HMDB51), or videos (TrecVID-MED). There are so many datasets of so many types that an index called “Yet Another Computer Vision Index to Datasets” exists solely to list frequently used CV datasets.

These datasets are expensive to create, hence the keen desire for access to existing ones. Fortunately, researchers tend to be willing to share their datasets with others. Unfortunately, it can take an enormous amount of time to translate another researcher’s dataset into something usable, largely because of the idiosyncratic ways in which datasets are annotated.

“Annotations provide the guidance for learning methods to learn,” said Corso. “This is as true for face detectors in your digital camera as for the pedestrian detectors used in modern assistive driving technology. Most recent advances made in the artificial intelligence community are useless without annotations.”

Researchers typically spend significant time writing middleware code to convert a dataset’s annotations, as well as its metadata, evaluation protocols, and other details, into their own format.

“A primary goal of this project is to roll all of that code into COVE so people can get datasets that they need to use directly exported in a format that they can use,” said Prof. Corso. “When datasets are imported into COVE, they are stored in our common universal format.” Datasets can then be exported in either their original formats, or in this common data format.
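To make the idea concrete, here is a minimal Python sketch of the kind of per-dataset conversion code that COVE aims to absorb. Everything in it is hypothetical: the two source layouts (one storing boxes as corner coordinates, one as position plus size), their field names, and the `BoxAnnotation` record are illustrative assumptions, not COVE’s actual universal format, which is not described here.

```python
from dataclasses import dataclass


# Hypothetical "common format" record for a single bounding-box annotation.
# This is an illustrative assumption, not COVE's real schema.
@dataclass(frozen=True)
class BoxAnnotation:
    image_id: str
    label: str
    x: float       # left edge, in pixels
    y: float       # top edge, in pixels
    width: float
    height: float


def import_corner_format(record: dict) -> BoxAnnotation:
    """Import from a hypothetical dataset that stores boxes as [x1, y1, x2, y2]."""
    x1, y1, x2, y2 = record["bbox"]
    return BoxAnnotation(record["image"], record["class"], x1, y1, x2 - x1, y2 - y1)


def import_xywh_format(record: dict) -> BoxAnnotation:
    """Import from a hypothetical dataset that stores boxes as [x, y, w, h]."""
    x, y, w, h = record["box"]
    return BoxAnnotation(record["file"], record["category"], x, y, w, h)


def export_corner_format(ann: BoxAnnotation) -> dict:
    """Export a common record back to the hypothetical corner-coordinate layout."""
    return {
        "image": ann.image_id,
        "class": ann.label,
        "bbox": [ann.x, ann.y, ann.x + ann.width, ann.y + ann.height],
    }


# One importer per source format; adding a dataset means adding one adapter here.
IMPORTERS = {"corner": import_corner_format, "xywh": import_xywh_format}


def to_common(source_format: str, records: list[dict]) -> list[BoxAnnotation]:
    """Convert a list of dataset-specific records into the common format."""
    importer = IMPORTERS[source_format]
    return [importer(r) for r in records]


if __name__ == "__main__":
    a = to_common("corner", [{"image": "img1.jpg", "class": "pedestrian", "bbox": [10, 20, 50, 100]}])
    b = to_common("xywh", [{"file": "img1.jpg", "category": "pedestrian", "box": [10, 20, 40, 80]}])
    print(a == b)  # True: both layouts describe the same box
    print(export_corner_format(b[0]))
```

The design point is that each dataset needs one importer into the shared record and, optionally, one exporter back out. Once those adapters live in a central place, any researcher can move between formats without writing the middleware again.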

COVE is the result of several years of planning by the computer vision community. An earlier NSF grant enabled Corso and Saenko to solicit feedback from the CV community so that the project could move forward in a way that would satisfy the needs of the majority of computer vision researchers.

“Virtually everyone agreed on the value of a shared, community-driven infrastructure for data storage, annotation representation, and associated tools developed to manipulate the data,” said Prof. Corso.

With the research community on board, the next bit of good news was the participation of the Computer Vision Foundation, which agreed to host this new massive exchange of datasets, annotations and tools. The Foundation’s open access archive is one of the most popular resources in all of computer vision, and currently transfers 1.5 terabytes of papers per month to many thousands of users across the globe.

“Thanks to our unique partnership with the Computer Vision Foundation, COVE has excellent prospects to become the primary data resource for the entire field,” said Prof. Walter Scheirer (University of Notre Dame), the third co-PI on the project.

Through the shared resources available in COVE, researchers across many disciplines will be able to build directly on one another’s work. The exchange will facilitate scientific reproducibility, foster innovation, and speed progress in fields related to computer vision and artificial intelligence.

COVE is currently in prototype form on the web at: http://cove.thecvf.com