Model helps robots think more like humans when searching for objects

The model is a practical method for robots to look for target items in complex, realistic environments.

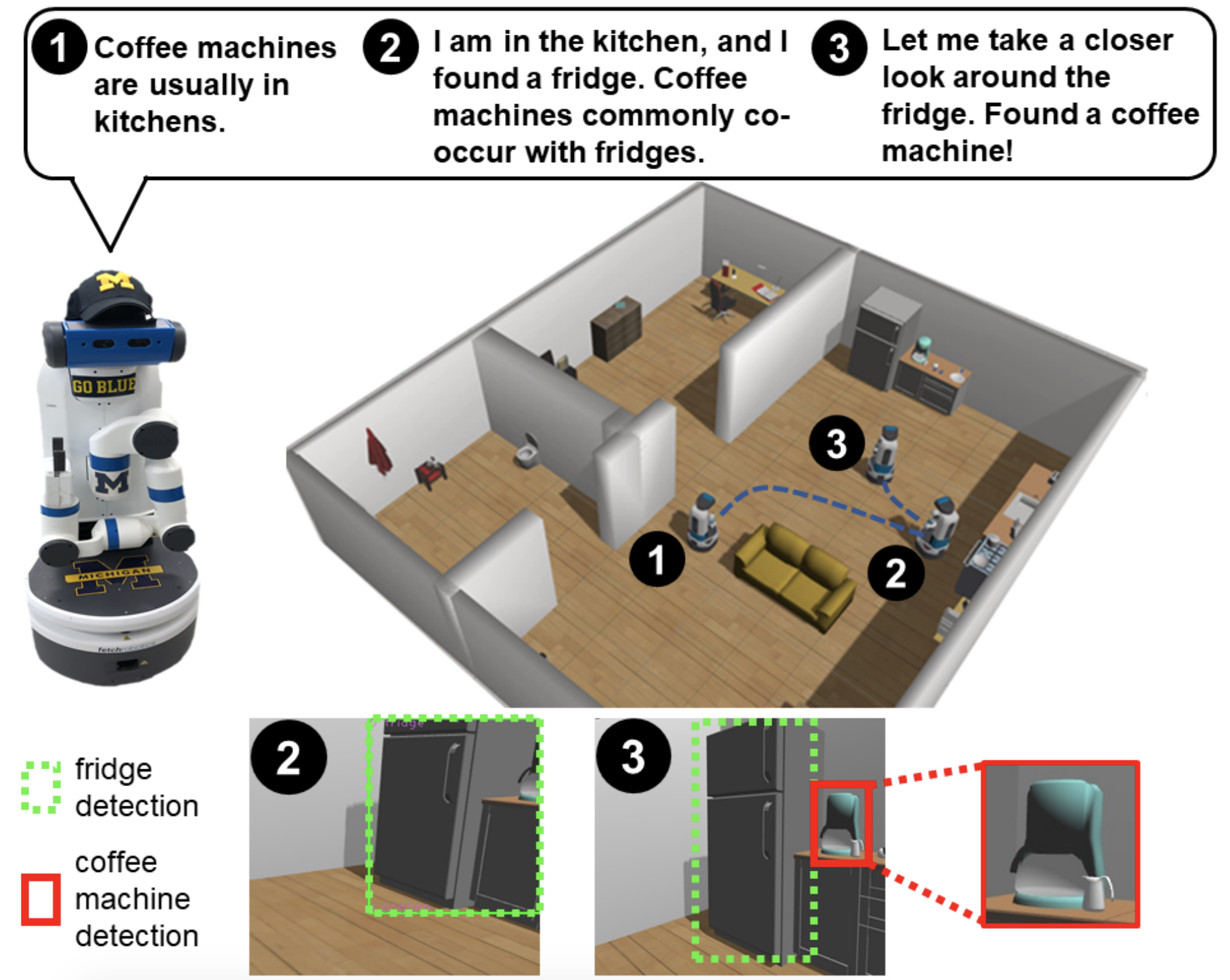

Robots can find things faster by learning how different objects around the house are related, according to work from the University of Michigan. A new model gives robots a visual search strategy that can teach them, in one of the paper’s examples, to look for a coffee pot nearby if a refrigerator is already in sight.

The work, led by Prof. Chad Jenkins and CSE PhD student Zhen Zeng, was recognized at the 2020 International Conference on Robotics and Automation with a Best Paper Award in Cognitive Robotics.

A common aim of roboticists is to give machines the ability to navigate in realistic settings – for example, the disordered, imperfect households we spend our days in. These settings can be chaotic, with no two exactly the same, and robots in search of specific objects they’ve never seen before will need to pick them out of the noise.

“Being able to efficiently search for objects in an environment is crucial for service robots to autonomously perform tasks,” says Zeng. “We provide a practical method that enables [a] robot to actively search for target objects in a complex environment.”

But homes aren’t total chaos. We organize our spaces around different kinds of activities, and certain groups of items are usually stored or installed in close proximity to each other. Kitchens typically contain our ovens, fridges, microwaves, and other small cooking appliances; bedrooms will have our dressers, beds, and nightstands; and so on.

Zeng and Jenkins have proposed a method that takes advantage of these common spatial relationships. Their “SLiM” (Semantic Linking Maps) model associates certain “landmark objects” in the robot’s memory with other related objects, along with data about how the two are typically positioned relative to each other. SLiM factors in several features of both the target object and the landmark object to give robots a more robust understanding of how things can be arranged in an environment.
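To make the idea of a spatial link concrete, here is a minimal Python sketch, assuming a grid-based map and a single hypothetical “near” relation modeled as a Gaussian falloff around the landmark. The `RELATION_SPREAD` table, the `target_likelihood` function, and its spread values are illustrative inventions, not SLiM’s actual parameterization; the point is only that a landmark’s known position can be turned into a probability map of where the target is likely to be.

```python
import math

# Hypothetical "near" relation: for each (target, landmark) pair, the spread
# (in grid cells) of where the target is usually found around the landmark.
# These values are illustrative, not taken from the SLiM paper.
RELATION_SPREAD = {
    ("coffee_pot", "refrigerator"): 1.5,
    ("pillow", "bed"): 0.5,
}


def target_likelihood(target, landmark, landmark_xy, cells):
    """Probability of the target being in each cell, given the landmark's
    position, modeled here as an isotropic Gaussian falloff."""
    sigma = RELATION_SPREAD[(target, landmark)]
    lx, ly = landmark_xy
    weights = {
        (x, y): math.exp(-((x - lx) ** 2 + (y - ly) ** 2) / (2 * sigma ** 2))
        for (x, y) in cells
    }
    total = sum(weights.values())
    # Normalize so the probabilities over all cells sum to 1.
    return {cell: w / total for cell, w in weights.items()}


# A 6 x 6 grid with the refrigerator seen at cell (1, 1).
cells = [(x, y) for x in range(6) for y in range(6)]
probs = target_likelihood("coffee_pot", "refrigerator", (1, 1), cells)
best = max(probs, key=probs.get)
print(best)  # (1, 1)
```

A larger spread for a pair distributes the probability over more of the room, which is one simple way to encode that some objects are only loosely tied to their landmarks.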

“When asked where a target object can be found, humans are able to give hypothetical locations expressed by spatial relations with respect to other objects,” they write. “Robots should be able to reason similarly about objects’ locations.”

The model isn’t simply a hard-coded record of how close different objects usually are to one another – look around a room from one day to the next and you’re sure to see enough changes to quickly make that approach futile. Instead, SLiM accounts for uncertainty in an object’s location.

“Previous works assume landmark objects are static, in that they mostly remain where they were last observed,” the authors explain in their paper on the project. To overcome this limitation, the researchers use SLiM to simultaneously maintain the robot’s belief over the locations of both the target and the landmark objects, while also accounting for the possibility that the landmark may have moved. To reason about where objects can be, they use a factor graph, a type of graph that represents a probability distribution as a product of simpler factors, to model the relationships between different objects probabilistically.
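A toy example shows why allowing the landmark to move matters. The Python sketch below, assuming four hypothetical discrete locations and made-up factor values, represents the joint belief over the target’s and the landmark’s positions as a product of two factors (a landmark prior that allows for movement and a “near” relation) and evaluates it by brute-force enumeration. SLiM works over a real map with its own inference; the names `relation_factor`, `landmark_prior`, `joint_belief`, and `p_moved` are illustrative only.

```python
from itertools import product

# Hypothetical discrete locations; a real system would reason over a map.
LOCATIONS = ["counter", "table", "sofa", "bed"]


def relation_factor(t, l):
    # Illustrative "near" factor: the target is usually at the landmark's spot.
    # For a fixed landmark spot, this sums to 1 over the four target spots.
    return 0.7 if t == l else 0.1


def landmark_prior(l, last_seen, p_moved):
    # Mixture: the landmark stayed put with probability 1 - p_moved,
    # otherwise it moved to a uniformly random spot.
    stayed = (1 - p_moved) if l == last_seen else 0.0
    return stayed + p_moved / len(LOCATIONS)


def joint_belief(last_seen, p_moved, searched=()):
    """Joint belief over (target spot, landmark spot) as a product of factors.
    Spots in `searched` were inspected and neither object was seen there;
    assuming a perfect detector, every hypothesis using them gets zero weight."""
    joint = {}
    for t, l in product(LOCATIONS, LOCATIONS):
        if t in searched or l in searched:
            joint[(t, l)] = 0.0
        else:
            joint[(t, l)] = landmark_prior(l, last_seen, p_moved) * relation_factor(t, l)
    z = sum(joint.values())
    if z == 0:
        raise ValueError("no hypothesis is consistent with the observations")
    return {k: v / z for k, v in joint.items()}


def target_marginal(joint):
    # Sum out the landmark to get the belief over the target's location alone.
    belief = {loc: 0.0 for loc in LOCATIONS}
    for (t, _), p in joint.items():
        belief[t] += p
    return belief


# Before searching, the target is most likely near the landmark's last-seen spot.
before = target_marginal(joint_belief("counter", p_moved=0.2))
print(max(before, key=before.get))  # counter

# After checking the counter and seeing nothing, belief moves to the other
# spots, which is possible only because the landmark is allowed to have moved.
after = target_marginal(joint_belief("counter", p_moved=0.2, searched={"counter"}))
```

With `p_moved=0`, which mirrors the static-landmark assumption the authors criticize, the same empty observation at the counter leaves no consistent hypothesis, and `joint_belief` raises `ValueError`.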

With this knowledge of possible object relations in tow, SLiM guides the robot to explore promising regions that may contain either the target or the landmark objects. This approach builds on previous findings showing that locating a landmark first (indirect search) can be faster than looking for the target directly (direct search). The strategy used by Jenkins and Zeng is a hybrid of the two.
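The difference between direct, indirect, and hybrid search can be sketched as a choice of what the robot scores when deciding where to look next. In the hypothetical Python function below, direct search ranks spots by the chance of seeing the target, indirect search by the chance of seeing the landmark, and the hybrid by the chance of seeing either. The `next_view` function, the travel-cost weighting, and the example belief are assumptions for illustration, not the planner described in the paper.

```python
def next_view(joint, locations, travel_cost, mode="hybrid"):
    """Choose the next spot to inspect from a joint belief over
    (target spot, landmark spot) pairs.

    direct:   probability that the target is there
    indirect: probability that the landmark is there
    hybrid:   probability that the target or the landmark is there
    Each score is divided by a hypothetical travel cost to that spot."""
    def score(loc):
        if mode == "direct":
            p = sum(v for (t, _), v in joint.items() if t == loc)
        elif mode == "indirect":
            p = sum(v for (_, l), v in joint.items() if l == loc)
        elif mode == "hybrid":
            p = sum(v for (t, l), v in joint.items() if t == loc or l == loc)
        else:
            raise ValueError(f"unknown mode: {mode}")
        return p / travel_cost[loc]

    return max(locations, key=score)


# Illustrative belief: the target is split between A and C,
# but most of the probability mass puts the landmark at B.
joint = {("A", "B"): 0.35, ("C", "B"): 0.35, ("A", "A"): 0.16, ("C", "C"): 0.14}
cost = {"A": 1.0, "B": 1.0, "C": 1.0}
print(next_view(joint, ["A", "B", "C"], cost, mode="direct"))  # A
print(next_view(joint, ["A", "B", "C"], cost, mode="hybrid"))  # B
```

Here direct search heads for A, while the hybrid prefers B, the single spot most likely to reveal one of the two objects; once the landmark is found, the spatial relation can narrow down where the target sits.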

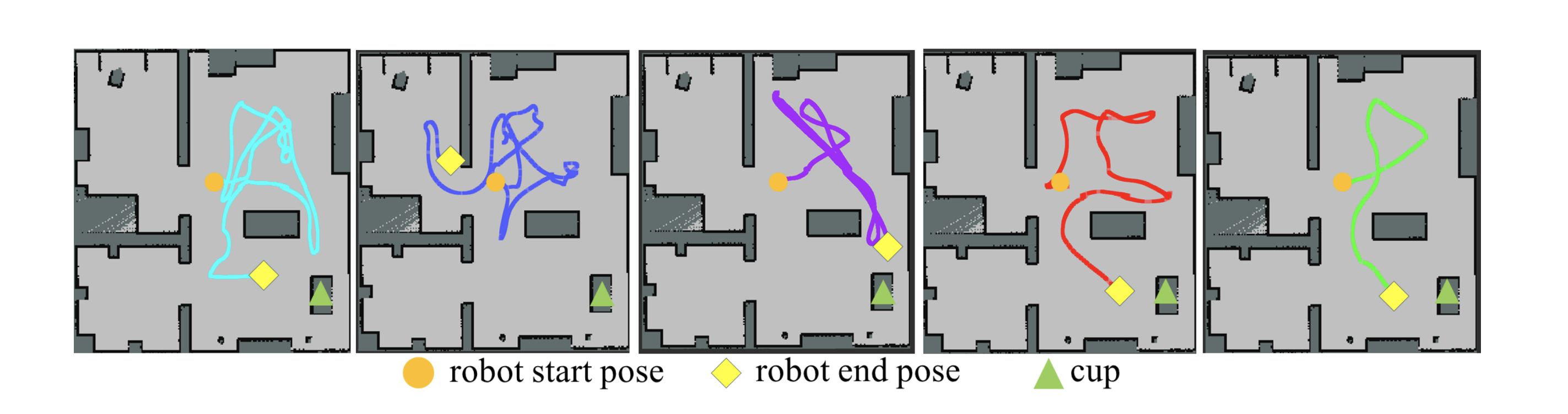

In experiments, the team tested the performance of five different search models in the same simulated environment. One was a naive direct search with no knowledge of objects’ spatial relations, and the remaining four used SLiM’s spatial mapping combined with different search strategies or starting advantages:

- Direct search with a known prior location for the landmark object, but not accounting for any likelihood that it may have been moved

- Direct search with a known prior location for the landmark object that accounts for the likelihood that it may have been moved

- Direct search with no prior knowledge of the landmark’s location

- Hybrid search with no prior knowledge of the landmark’s location

In the end, SLiM combined with hybrid search found the target objects along the most direct route and in the least search time in every test.

This work was published in the paper “Semantic Linking Maps for Active Visual Object Search.”
